Statistical methods and information theory

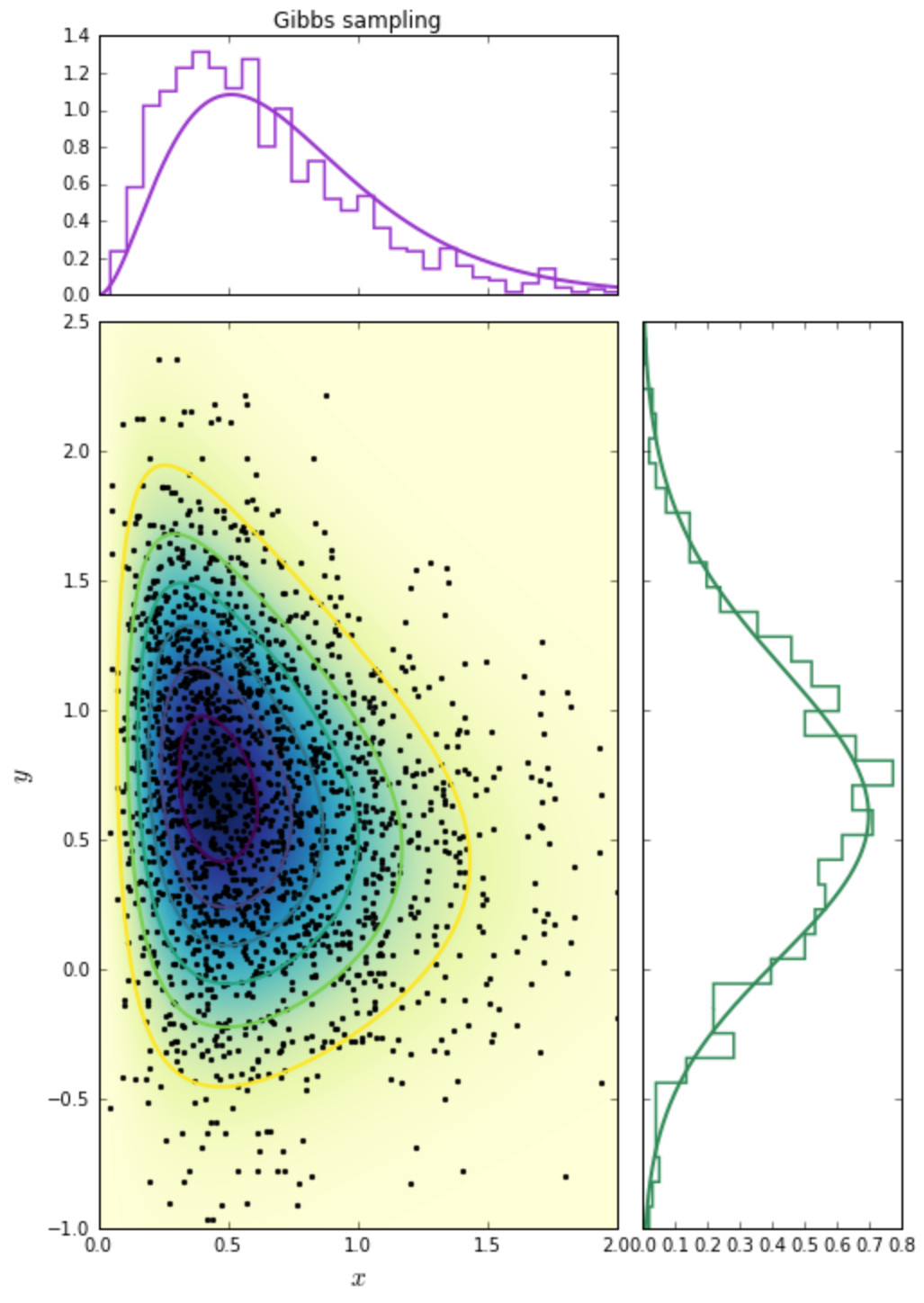

Gibbs sampling in two dimensions. Figure from my lectures on GitHub.

Gibbs sampling in two dimensions. Figure from my lectures on GitHub.

My research focuses on inference, which means making the connection between theoretical models and experimental data in order to learn new things about the Universe. My specific interests centre on developing and applying advanced statistical and numerical methods for extracting information from data, in a variety of problems in cosmology.

In particular, I employ Bayesian hierarchical modelling (a complete and coherent formalism for situations when information is available on several different levels) as a practical tool for exploring complex problems and designing solutions. I develop and run codes for knowledge discovery in high-dimensional domains (up to several millions), on complex "big data" sets (hundreds of thousands of points).

Also, while Bayesian methods are commonly associated with big data, I also use them for inference in the opposite regime, i.e. for problems for which we possess little or no data. In these small signal-to-noise situations, expert modelling is critical and serves as a basis for robust reasoning under uncertainty.

Information propagation in cosmic web analysis. The video shows the evolution of the entropy of the web-type posterior, from primordial times to the present conditions. The entropy quantifies the information content of the posterior, which results from fusing the information from the prior and the data constraints.

Extracting knowledge from data also brings a natural connection with information theory, the field that studies the quantification, transmission and storage of information. In a cosmological context (and especially in cosmic web analyses), I use information-theoretic measures to quantify the amount of uncertainty in probability distributions and in the outcome of random processes.

In practice, numerical computations often require the use of high-performance computing with highly-efficient, parallel codes for the mapping of parameter spaces. Data visualisation, to communicate results and uncover new insights, is another important element of my work.

These new analytical, statistical and computational methods are needed both to sharpen up theoretical predictions and to enable us to exploit the full potential of present and future data sets to constrain them.